How many times have you read “X result is statistically significant” and wondered what that really means? Does it tell you something you can base a decision on, or is it just one part of the evidence picture? Let’s walk through the key points together.

What does statistically significant mean?

Let’s first talk about the “statistic” in statistically significant. To do this, we need to consider how the scientific community creates new knowledge and answers important questions. Suppose policymakers want to find out if a new intervention is better than the traditional approach. This information will help guide their funding decisions. To answer this question, they decide to set up a research study that uses hypothesis testing.

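Hypothesis testing gives you a structured way to weigh two competing statements about a population. In this study, the “null hypothesis” is that there is no difference between the traditional and new interventions. The “alternative hypothesis” is that the new intervention is better than the traditional approach. Strictly speaking, the test does not tell you which statement is more likely to be true. Instead, it asks whether the data would be surprising if the null hypothesis were true. So the next step is to run statistical tests to check whether the difference in outcomes between the groups is large enough to reject the null hypothesis in favor of the alternative.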

What is a p-value?

Now we can get to the “significant” part. The statistical test will usually produce a probability value, or p-value. The p-value tells you how likely you would be to see a result at least as extreme as the one you observed if the null hypothesis were true (that is, if there were really no difference between the interventions). P-values always fall between 0 and 1 (a probability between 0% and 100%), and the smaller the number, the harder it is to explain the result by chance alone. By convention, a p-value under 0.05 (or 5%) is called “statistically significant.” In other words, if there were truly no difference between our two groups, a difference as large as the one we observed would turn up less than 5% of the time. Anything above 0.05 is considered not statistically significant and is often disregarded. Note that a p-value is not the probability that the result is real, nor the probability that the null hypothesis is true.
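The “if there were truly no difference” part of the definition is easier to see in a simulation. Below is a minimal sketch of a permutation test, which is only one of several ways to get a p-value (a real study might use a t-test or another method). It assumes the null hypothesis by treating the group labels as interchangeable, shuffles them many times, and counts how often a shuffled difference is at least as large as the observed one. The scores are made up, and `permutation_p_value` is a hypothetical helper, not part of any library.

```python
import random
import statistics


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Estimate a two-sided p-value for a difference in means by permutation.

    Under the null hypothesis the group labels are interchangeable, so we
    shuffle them many times and count how often a shuffled difference is at
    least as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    observed = abs(statistics.fmean(group_a) - statistics.fmean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(statistics.fmean(pooled[:n_a]) - statistics.fmean(pooled[n_a:]))
        if diff >= observed:
            at_least_as_extreme += 1
    # Add-one correction: counts the observed split itself, so p is never exactly 0.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical outcome scores, made up for illustration.
traditional = [52, 48, 55, 50, 47, 53, 49, 51, 54, 46]
new_intervention = [56, 58, 53, 60, 55, 57, 54, 59, 52, 58]

p = permutation_p_value(traditional, new_intervention)
print(f"Estimated p-value: {p:.4f}")
```

A small p here means that random relabeling almost never produces a gap this large, not that the new intervention is “real” with probability 1 - p.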

In practice, many people look only at the p-value when drawing conclusions from a research study. So what’s the problem? As I alluded to in the opening paragraph, p-values give you only part of the evidence picture.
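Part of the reason is that a p-value depends on sample size as well as on the size of the effect. The sketch below uses a standard pooled two-proportion z-test (one common test, not necessarily what any particular study used) with made-up numbers: the same half-percentage-point difference is nowhere near significant with 1,000 people per group, yet clears the 0.05 bar comfortably with 100,000 per group. `two_proportion_p_value` is a hypothetical helper written for this illustration.

```python
import math


def two_proportion_p_value(successes_a, n_a, successes_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test (normal approximation)."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / standard_error
    # Two-sided tail area of the standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


# The same hypothetical effect at two sample sizes:
# 10.0% vs 10.5% of participants with a good outcome (a 0.5 percentage-point gap).
for n in (1_000, 100_000):
    p = two_proportion_p_value(n * 100 // 1000, n, n * 105 // 1000, n)
    print(f"{n:>7,} per group: difference = 0.5 points, p = {p:.4f}")
```

The effect is identical in both rows; only the p-value moves.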

Three things to keep in mind when using statistics to make decisions

1. The p-value is not enough to make a decision

Many scientists argue that the 0.05 cut-off point is arbitrary and misleading. There is even a movement within the scientific community to abolish it outright. Despite these limitations, I think p-values can still give us important information, but they are just one ingredient in the evidence recipe. So how can we use them better?

2. Effect sizes tell you what is actually important

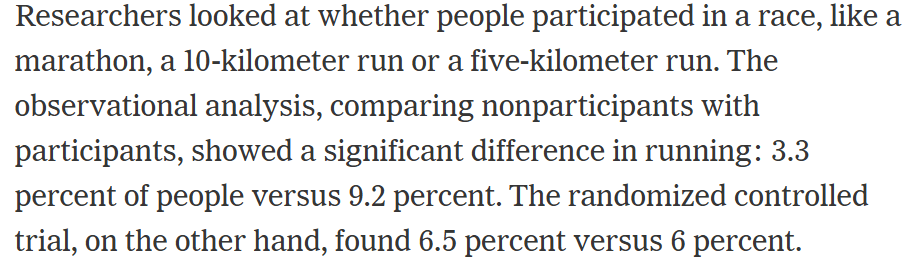

And this is where the effect size comes in. An effect size measures how large the difference between the two groups in your study is. Effect sizes are important because they offer a clear number that’s usually easy to interpret (though some, like the odds ratio, can be tricky to understand). Let’s look at a concrete example. In this New York Times article, the author explores the benefits of real-world randomized controlled trials (RCTs). Evaluating the effectiveness of workplace wellness programs, the article said:

This article demonstrates how clear and intuitive effect sizes can be: the RCT showed a much smaller effect than the observational study (12 times smaller, in fact). What it does not tell us is whether the effect the RCT found is meaningful.
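Effect sizes come in two broad flavors: raw differences in the outcome’s own units, and standardized ones such as Cohen’s d, which divides the difference in means by a pooled standard deviation so that studies with different scales can be compared. Here is a minimal sketch computing both on made-up scores; it does not reproduce the article’s figures, and `cohens_d` is a hypothetical helper, not a library function.

```python
import math
import statistics


def cohens_d(group_a, group_b):
    """Standardized mean difference (group_b minus group_a) using the pooled sample SD."""
    n_a, n_b = len(group_a), len(group_b)
    var_a = statistics.variance(group_a)  # sample variance, n - 1 denominator
    var_b = statistics.variance(group_b)
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    return (statistics.fmean(group_b) - statistics.fmean(group_a)) / pooled_sd


# Hypothetical outcome scores, made up for illustration.
traditional = [52, 48, 55, 50, 47, 53, 49, 51, 54, 46]
new_intervention = [56, 58, 53, 60, 55, 57, 54, 59, 52, 58]

raw_difference = statistics.fmean(new_intervention) - statistics.fmean(traditional)
relative_difference = raw_difference / statistics.fmean(traditional)

print(f"Raw difference: {raw_difference:.1f} points")
print(f"Relative difference: {relative_difference:.1%}")
print(f"Cohen's d: {cohens_d(traditional, new_intervention):.2f}")
```

The raw difference is usually the easiest to explain to decision-makers; the standardized version is handier for comparing across studies.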

3. The difference or change in effect size should be meaningful in the real world

The final piece of our evidence picture is putting the effect size into context. In the New York Times example, we need to ask ourselves: does half a percent matter? The answer depends on the setting. How many employees participated in the wellness program? How much does the program cost per employee? What positive outcomes does participating lead to? If the company is large, the program is cheap, and participation leads to positive outcomes, then half a percent may matter. If only some of these things are true, as this study showed, then half a percent might not mean much, even if the difference is statistically significant.
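Those contextual questions can be turned into simple arithmetic. The sketch below assumes “half a percent” means a 0.5 percentage-point increase in the share of employees with some positive outcome; the headcounts and the $100 per-employee cost are made up, and `program_impact` is a hypothetical helper.

```python
def program_impact(employees, cost_per_employee, effect_pct_points):
    """Return (extra positive outcomes, cost per extra outcome) for a program.

    effect_pct_points is the absolute gain in the share of employees with the
    positive outcome, in percentage points (e.g. 0.5 for half a percent).
    """
    extra_outcomes = employees * effect_pct_points / 100
    total_cost = employees * cost_per_employee
    return extra_outcomes, total_cost / extra_outcomes


# Hypothetical scenarios: a half-point effect in a small and a large company.
for employees in (200, 50_000):
    extra, cost_each = program_impact(employees, cost_per_employee=100, effect_pct_points=0.5)
    print(f"{employees:>6,} employees: {extra:g} extra positive outcomes, "
          f"${cost_each:,.0f} per extra outcome")
```

Because the cost per extra outcome works out to the cost per employee divided by the effect (as a proportion), company size changes how many people benefit but not the price of each benefit. Whether roughly $20,000 per outcome is worth paying depends on what that outcome is.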

Key Takeaway

As an evidence user, you should look at the entire evidence picture. An effect size tells you more than a p-value alone. Consider the policy or implementation context when deciding whether an effect size is meaningful.

Citations

Carroll, Aaron. “Workplace Wellness Programs Don’t Work Well. Why Some Studies Show Otherwise.” The New York Times, August 6, 2018.

Other Resources

- A good article that describes how to interpret the not-so-intuitive effect sizes
